Ph.D. course announcement: Optimisation algorithms in Statistics I (4 HEC)

Summary

Optimisation (computation of minima or maxima) is frequently needed in statistics. Maximum likelihood estimates, optimal experimental designs, and risk minimisation in decision theoretic models are examples where the solutions of optimisation problems usually do not have a closed form and must be computed numerically with an algorithm. Moreover, the field of machine learning depends on optimisation and places new demands on algorithms for the computation of minima and maxima.

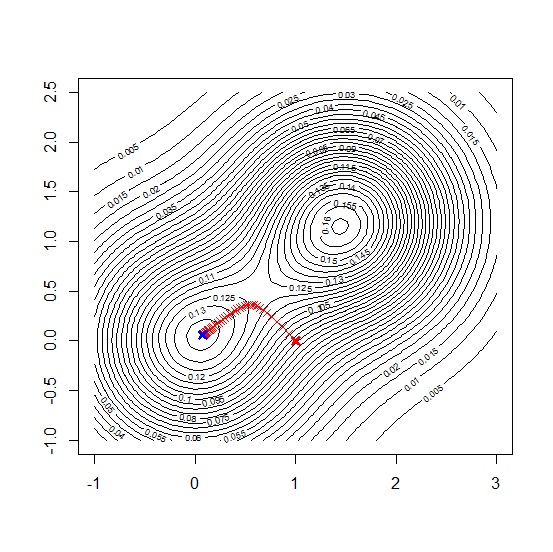

In this course, we will start by discussing properties of gradient based algorithms such as the Newton method and the gradient descent method. We will then look at developments triggered especially by machine learning and discuss stochastic gradient based methods. Recent work has recognised the value of gradient free algorithms, and we will consider so-called metaheuristic algorithms, e.g. particle swarm optimisation. The last topic of the course deals with handling restrictions during optimisation, such as equality and inequality restrictions.

We will implement these algorithms in R. Examples from machine learning and optimal design will illustrate the methods.

You are most welcome to join the course!

Frank Miller, Department of Statistics, Stockholm University

Course homepage

The course homepage is at http://gauss.stat.su.se/phd/oasi/

Prerequisites

The course is intended for Ph.D. students from Statistics or a related field (e.g. Mathematical Statistics, Engineering Science, Quantitative Finance, Computer Science). Prior knowledge of the following is expected:

- familiarity with R (or another programming language with similar capabilities),

- basic knowledge of multivariate calculus (e.g. from a Multivariate Statistics course),

- statistical inference (e.g. from a Master's level course).

The course is graded Pass or Fail. Examination is through individual home assignments on problems for each topic and through a concluding mini project.

Course literature

We will not use a central course book. Several articles, book chapters and other learning resources will be recommended.

Course structure

The course is divided into four topics. The topics will be discussed during online meetings on Zoom. Course participants will spend most of their study time solving the problem sets for each topic on their own computers without supervision. The course will be held in October and November 2020.

A second part of the course with a deeper focus on theoretical foundations (Optimisation algorithms in statistics II, 3.5 HEC) will be offered in Spring 2021.

Course schedule

- Topic 1: Gradient based algorithms

  Lectures: October 2; Time: 10-12, 13-15 (online, Zoom)

- Topic 2: Stochastic gradient based algorithms

  Lecture: October 13; Time: 9-12 (online, Zoom)

- Topic 3: Gradient free algorithms

  Lecture: October 23; Time: 9-12 (online, Zoom)

- Topic 4: Optimisation with restrictions

  Lecture: November 6; Time: 9-12 (online, Zoom)

To register for the course, please send an email to me (frank.miller at stat.su.se) by September 18, 2020. You are also welcome to email me with any questions related to the course.